L-HAWK: A Controllable Physical Adversarial Patch Against a Long-Distance Target

Authors: Taifeng Liu, Yang Liu, Zhuo Ma, Tong Yang, Xinjing Liu, Teng Li, Jianfeng Ma

Class: EE 7700 ML for CPS

Instructor: Dr. Xugui Zhou

Presented By: Group 4 on 11/04/2025

Summarized By: Group 3

SUMMARY:

L-HAWK: A Controllable Physical Adversarial Patch Against a Long-Distance Target (NDSS 2025) introduces a new kind of laser-triggered physical attack on autonomous vehicles (AVs). Conventional adversarial patches fool AV vision models but are always active and affect every vehicle nearby. L-HAWK overcomes this by using a printed patch that stays harmless until a laser signal activates it, allowing attackers to target a specific vehicle at up to 50 meters.

The authors propose an asynchronous learning framework that jointly optimizes the patch and the laser parameters under real-world noise, distances, and lighting. Experiments on object detectors (YOLO, Faster R-CNN) and classifiers (VGG, ResNet, Inception) show attack success rates over 90%, outperforming previous methods by more than 50% and extending attack distance sevenfold.

Key contributions include:

● A controllable long-range physical adversarial patch activated by laser signals.

● A new trigger modeling and joint optimization method combining physical and digital learning.

● Empirical validation in both digital and physical setups with high robustness.

Limitations involve dependency on camera frame timing, safety constraints during testing, and reduced stealth under night or close-range conditions.

Slide - 1: Title Slide

This is the introduction slide of the presentation.

Slide - 2: Overview

This slide outlines the presentation and the topics to be discussed.

Slide - 3: Part 1

This slide gives the paper’s title, its authors, and the venue where it was presented.

Slide - 4: L-Hawk

In this slide, we see the description of the title and the general idea of this study.

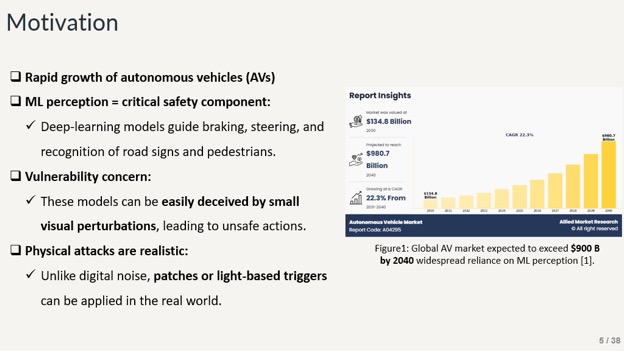

Slide - 5: Motivation

This slide covers the motivation for the work and why it matters. ML models are widely used for perception in safety-critical settings such as autonomous vehicles, among other sectors. These models are vulnerable to physical perturbations, and fooling them can be dangerous. Patch attacks come in different forms: a patch can be generated digitally or realized by physical means.

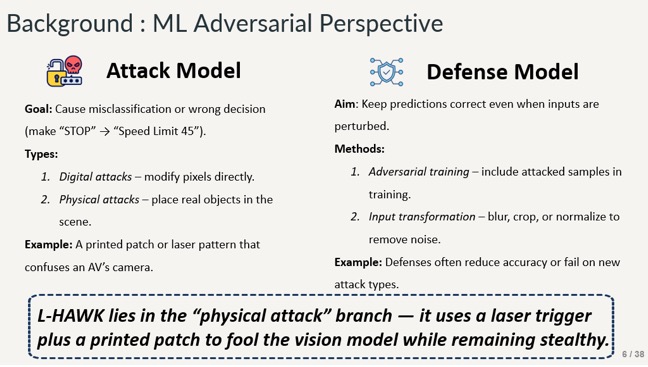

Slide - 6: Background

This slide presents the attack and defense models: the types of attacks, the methods of defense, examples of each, and where L-HAWK fits among them.

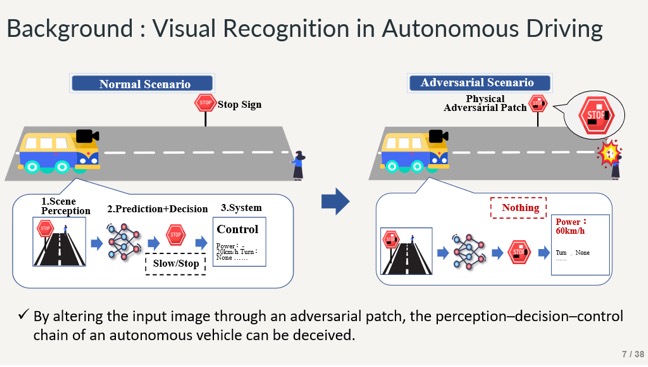

Slide - 7: Background

This slide shows the visual recognition model used in autonomous driving and how adversarial patches can fool it. These patches can be produced physically or generated by running adversarial attacks on the neural network.

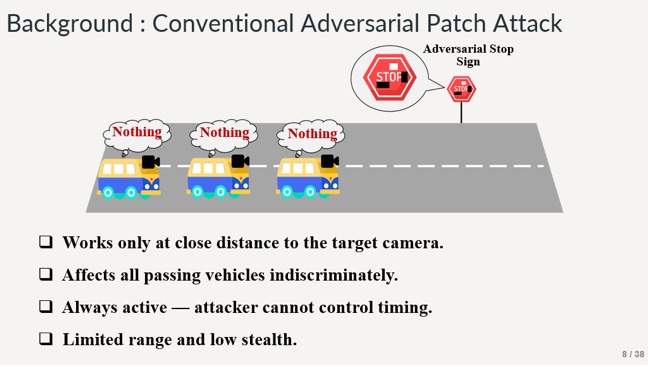

Slide - 8: Background

This slide shows a conventional adversarial patch attack applied to a stop sign. It affects every passing vehicle whose camera tries to recognize the sign.

Slide - 9: Research Question

This slide states the main research question that the paper sets out to answer.

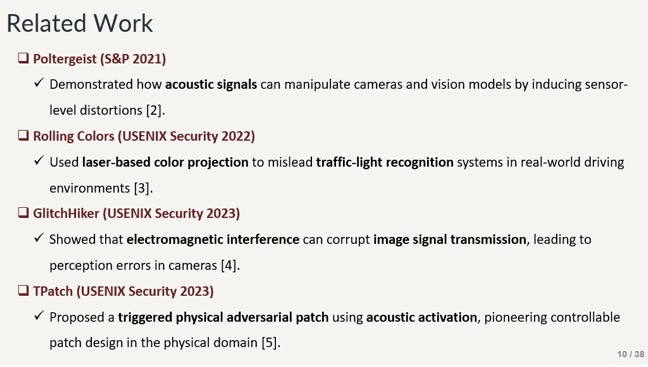

Slide - 10: Related work

This slide presents several existing studies addressing this research question and the specific problems they aimed to solve. These works explore the use of acoustic signals, laser-colored signals, electromagnetic interference, and triggered physical adversarial patch methods to attack autonomous driving models.

Slide - 11: Related Work

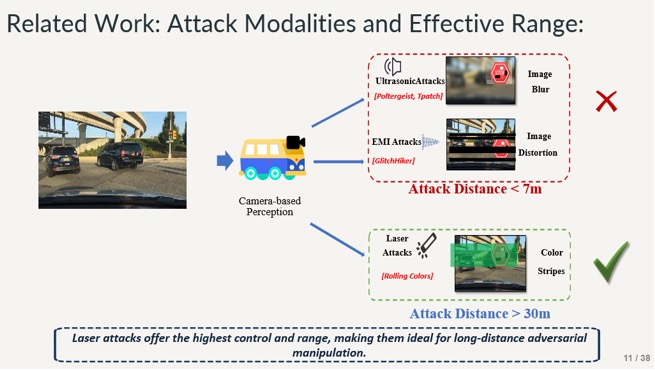

The related work on this slide proposes a laser-based attack that obstructs the vehicle’s camera, preventing it from capturing the correct road-sign image. It also shows that the laser method outperforms ultrasonic attacks because it remains effective at longer ranges.

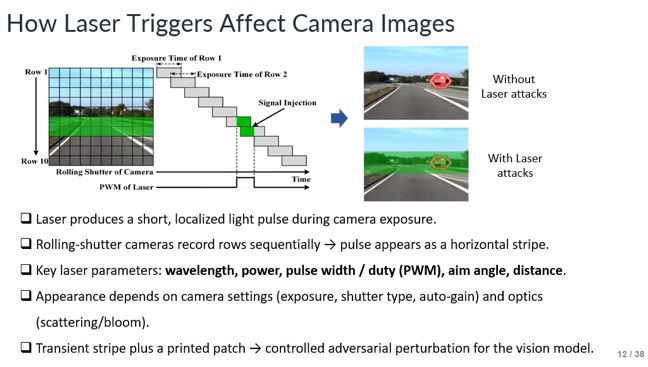

Slide - 12: How Laser Triggers Affect Camera Images

This is a key slide explaining how the laser can be controlled to obscure the specific pixels that contain the object in the camera’s field of view. During camera exposure the laser emits short, localized pulses; because rolling-shutter sensors record rows sequentially, those pulses can affect particular image rows. Controllable laser parameters include wavelength, power, pulse width, aim angle, and distance, while the resulting appearance also depends on the camera settings.
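
As a rough illustration of the row-selection idea on this slide, the sketch below computes which sensor rows a single laser pulse would overlap. It assumes a simplified rolling-shutter model in which row r starts exposing at r times a fixed readout interval; the function name, parameters, and numbers are hypothetical and are not taken from the paper.

```python
def rows_hit_by_pulse(pulse_start_us, pulse_width_us, row_readout_us,
                      exposure_us, num_rows):
    """Return the indices of rows whose exposure window overlaps the pulse.

    Assumption: row r exposes during
    [r * row_readout_us, r * row_readout_us + exposure_us).
    """
    pulse_end_us = pulse_start_us + pulse_width_us
    hit = []
    for r in range(num_rows):
        row_start = r * row_readout_us
        row_end = row_start + exposure_us
        # Two half-open intervals overlap when each starts before the other ends.
        if row_start < pulse_end_us and pulse_start_us < row_end:
            hit.append(r)
    return hit


# Toy values: 1080 rows, 10 us between row starts, 100 us exposure per row.
rows = rows_hit_by_pulse(pulse_start_us=300.0, pulse_width_us=50.0,
                         row_readout_us=10.0, exposure_us=100.0,
                         num_rows=1080)
print(rows[0], rows[-1], len(rows))
```

With these toy values the pulse overlaps rows 21 through 34, so only a narrow band of the image is affected; changing `pulse_start_us` moves that band up or down the frame.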

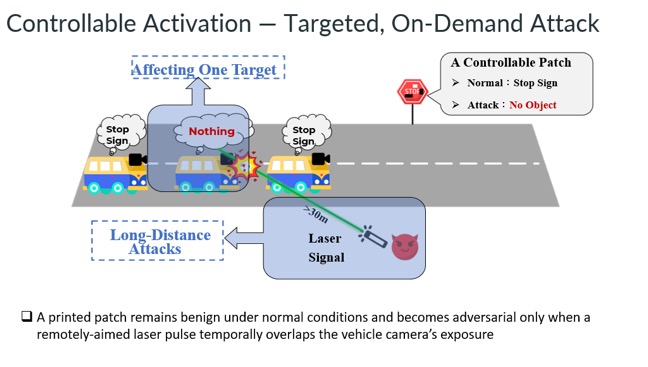

Slide - 13: Controllable Activation

Here we can see how the laser can selectively affect vehicles when the object (road sign) appears in their camera exposure.

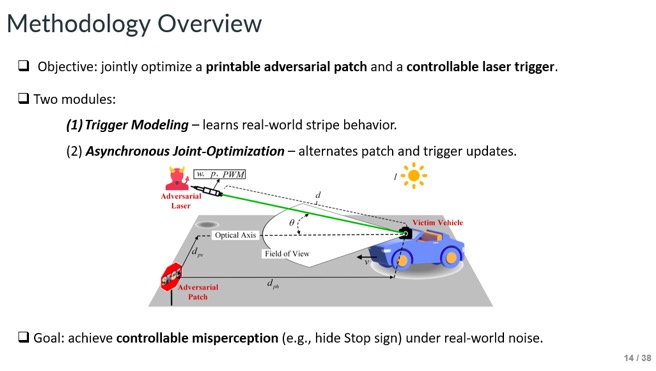

Slide - 14: Methodology Overview

This slide shows the paper’s primary goal: to optimize a printable adversarial patch and a controllable laser trigger so the attack can be precisely controlled.

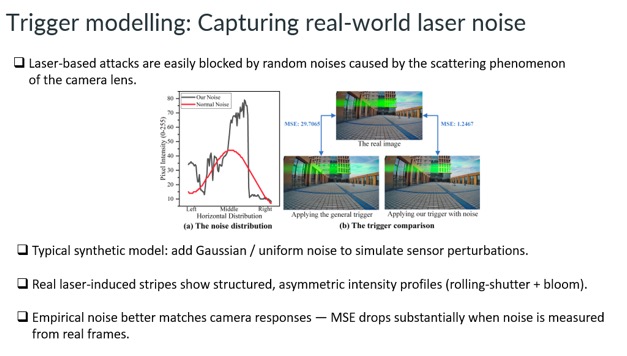

Slide - 15: Trigger modelling

This slide explains how real-world laser noise differs from synthetic noise used in simulations. Real laser-induced patterns are structured and asymmetric due to rolling-shutter and lens scattering effects. Modeling this empirical noise produces camera responses much closer to real images, significantly reducing MSE compared to generic noise models.
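
To make the MSE comparison concrete, here is a minimal sketch using made-up numbers. The `real` list stands in for the per-row brightness added by a laser pulse, `generic` is a flat offset with the same mean (a stand-in for a generic noise model), and `structured` is a stripe-shaped approximation. None of these values are measurements from the paper.

```python
def mse(a, b):
    """Mean squared error between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


# Hypothetical per-row added brightness (toy values, not measured data).
real = [0, 0, 5, 40, 90, 60, 20, 5, 0, 0]          # asymmetric stripe
generic = [22] * 10                                 # flat offset, same mean as `real`
structured = [0, 0, 0, 45, 85, 55, 25, 0, 0, 0]     # stripe-shaped model

print(mse(real, generic))     # 891.0
print(mse(real, structured))  # 15.0
```

Even in this toy case, a model that captures the stripe's shape has a far lower error than one that only matches the average brightness.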

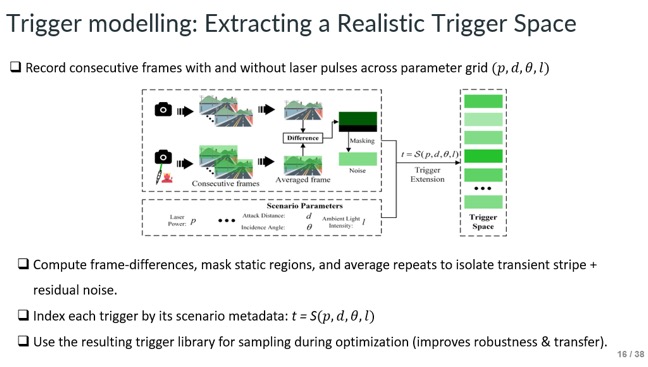

Slide - 16: Trigger modelling

The authors build a library of real laser triggers by recording frames across laser power, distance, angle, and lighting conditions; isolating the transient stripes via frame differencing and masking; and indexing each trigger for robust sampling during optimization.
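
The frame-differencing and masking step can be sketched as follows. This is an illustrative toy on nested lists, assuming one clean frame and one laser-on frame of the same scene; the function name, the threshold, and the library key layout are assumptions rather than the authors' implementation.

```python
def extract_trigger(clean_frame, laser_frame, threshold=10):
    """Return (trigger, mask) for one pair of grayscale frames.

    trigger: brightness added by the laser, zeroed outside the stripe.
    mask:    1 where the added brightness reaches the threshold, else 0.
    """
    trigger, mask = [], []
    for clean_row, laser_row in zip(clean_frame, laser_frame):
        # Frame difference, clipped so sensor noise cannot go negative.
        diff_row = [max(l - c, 0) for c, l in zip(clean_row, laser_row)]
        mask_row = [1 if d >= threshold else 0 for d in diff_row]
        trigger.append([d * m for d, m in zip(diff_row, mask_row)])
        mask.append(mask_row)
    return trigger, mask


# Toy 4x3 frames: the laser brightens rows 1 and 2 only.
clean = [[10, 10, 10], [12, 11, 10], [10, 10, 10], [10, 9, 10]]
laser = [[11, 10, 12], [90, 95, 88], [60, 58, 61], [10, 10, 9]]
trigger, mask = extract_trigger(clean, laser)

# Hypothetical index: (power_mW, distance_m, angle_deg, lighting) -> trigger.
library = {(5, 10, 0, "indoor"): trigger}
print(mask)
```

Keying the library by capture conditions is one simple way to sample triggers for a given scenario during optimization.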

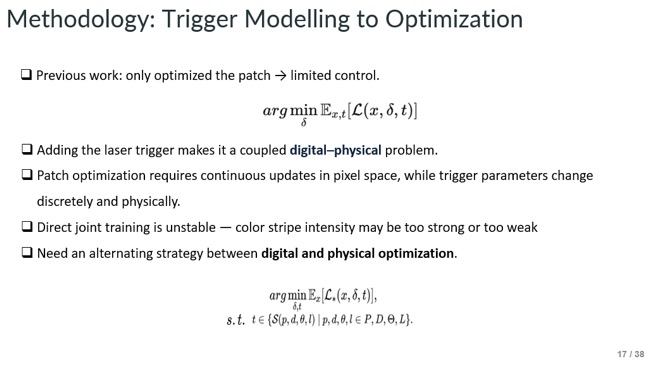

Slide - 17: Methodology

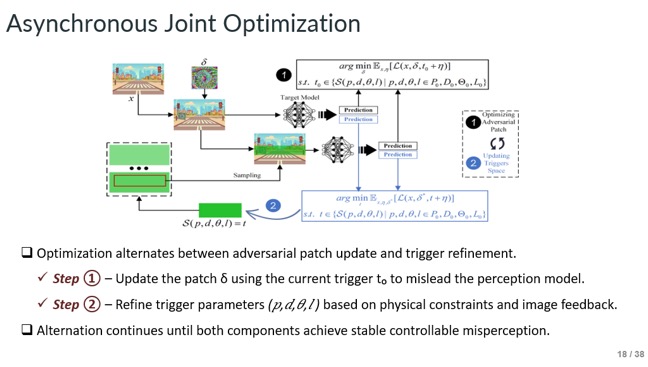

This slide explains the core challenge: jointly optimizing a printable adversarial patch (continuous, pixel-space) and a controllable laser trigger (discrete, physical) creates a coupled digital and physical problem that is unstable under direct joint training. The solution is an alternating optimization strategy that updates the digital patch and the physical trigger separately while constraining triggers to the measured trigger space.

Slide - 18: Asynchronous Joint Optimization

This slide shows the alternating procedure: update the digital patch with the current trigger held fixed, then refine the trigger parameters, and repeat until both converge to a stable, controllable attack.
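
The alternating loop can be illustrated with a deliberately tiny stand-in problem. In the sketch below the patch is a single continuous number, the trigger is chosen from a short list standing in for the measured trigger library, and the toy loss asks for a score of 1 when the trigger is present and 0 when it is absent. The loss, step size, and values are all assumptions for illustration and are not the paper's objective or models.

```python
def loss(patch, trigger):
    """Toy objective: attack active with the trigger, benign without it."""
    triggered_score = patch + trigger
    benign_score = patch
    return (triggered_score - 1.0) ** 2 + benign_score ** 2


def update_patch(patch, trigger, lr=0.1, steps=50):
    """Continuous step: gradient descent on the patch, trigger held fixed."""
    for _ in range(steps):
        grad = 2.0 * (patch + trigger - 1.0) + 2.0 * patch
        patch -= lr * grad
    return patch


def pick_trigger(patch, library):
    """Discrete step: best trigger from the measured set, patch held fixed."""
    return min(library, key=lambda t: loss(patch, t))


def alternate(library, patch=0.0, max_rounds=20):
    trigger = library[0]
    for _ in range(max_rounds):
        patch = update_patch(patch, trigger)
        best = pick_trigger(patch, library)
        if best == trigger:  # trigger choice is stable, so stop
            break
        trigger = best
    return patch, trigger


measured_triggers = [0.2, 0.5, 0.8]  # hypothetical trigger strengths
patch, trigger = alternate(measured_triggers)
print(round(patch, 3), trigger, round(loss(patch, trigger), 3))
```

Because the trigger is only ever selected from `measured_triggers`, it never leaves the measured trigger space, which mirrors the constraint described for the physical trigger.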

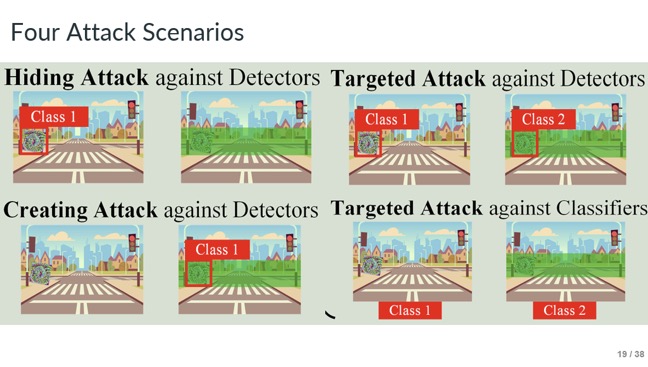

Slide - 19: Four Attack Scenarios

This slide lists four types of attacks: the hiding attack, the creating attack, the targeted attack against a detector, and the targeted attack against a classifier. The evaluation slides that follow cover all four.

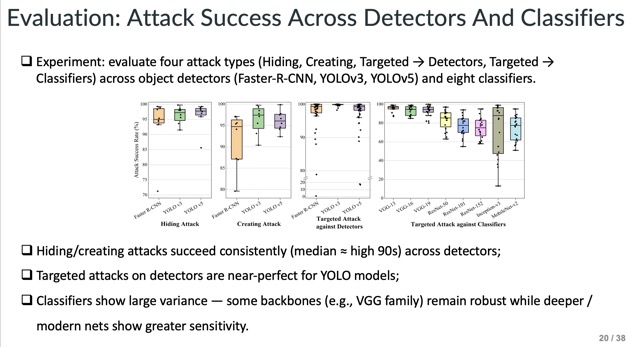

Slide - 20: Evaluation

This slide describes the main experimental setup, which covers all four attack types, and reports high attack success rates (≈90–99%) across multiple detectors and classifiers.

Slide - 21: Attack Robustness I

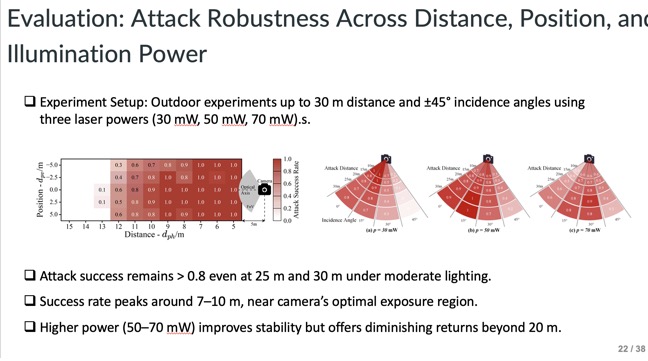

On this slide the presenters showed video demonstrations of the attack, testing how distance, position, and lighting affect it. The videos demonstrate that L-HAWK remains effective beyond 25m with strong robustness.

Slide - 22: Attack Robustness II

Outdoor experiments were also conducted at distances up to 30m, at varying incidence angles, and with three laser powers. Quantitative results confirmed improved stability at longer ranges and higher power levels, with attack success above 80% under moderate lighting. Beyond 20m, however, higher power yields diminishing returns.

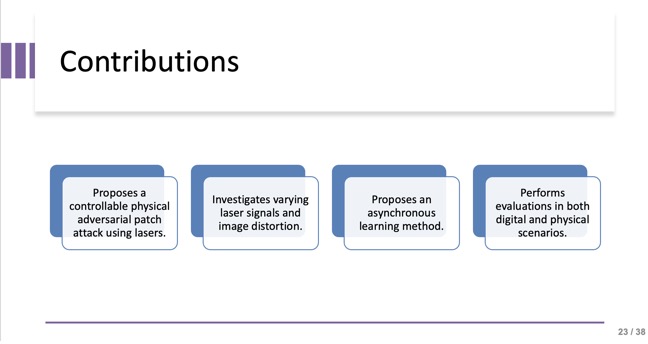

Slide - 23: Contributions

This slide summarizes the four main contributions in their own words: a controllable laser-triggered patch, a novel asynchronous optimization, an investigation of laser parameters, and digital and physical validation.

Slide - 24: Limitations

In their own words, they note three drawbacks: reliance on knowing the camera refresh rate, limited evaluation scale, and reduced stealth at close range or in dark conditions.

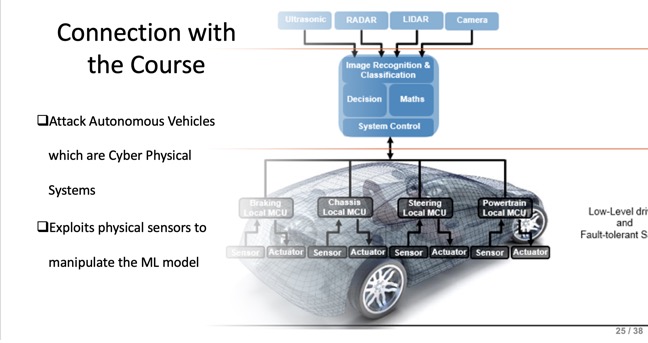

Slide - 25: Connection with the course

This slide links the paper to CPS security by showing that the attack targets ML perception in autonomous vehicles, a core component of a cyber-physical system.

Slide - 26: Part 2

This slide introduces how later studies build on L-HAWK and the evolving research directions in physical adversarial perception.

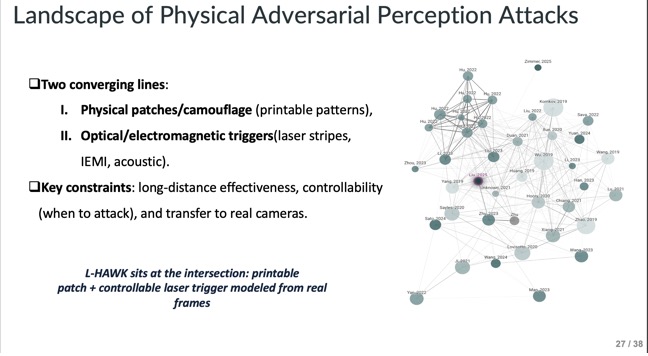

Slide - 27: Landscape of Physical Adversarial Perception Attacks

This slide shows the current landscape by highlighting works on physical patches, optical triggers, and electromagnetic triggers. It also shows L-HAWK’s place at the intersection of controllable and long-distance methods.

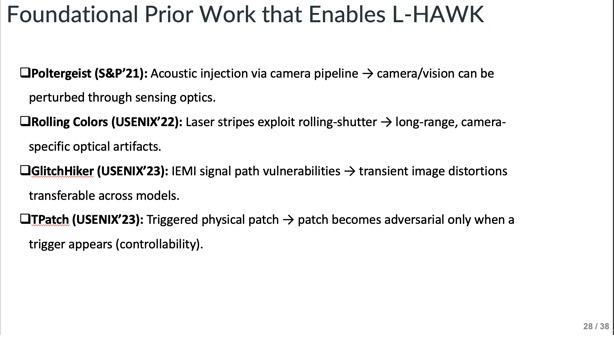

Slide - 28: Foundational Prior Work

This slide lists Poltergeist, Rolling Colors, GlitchHiker, and TPatch as earlier works that inspired controllable or optical adversarial attacks, and describes the approach each one took.

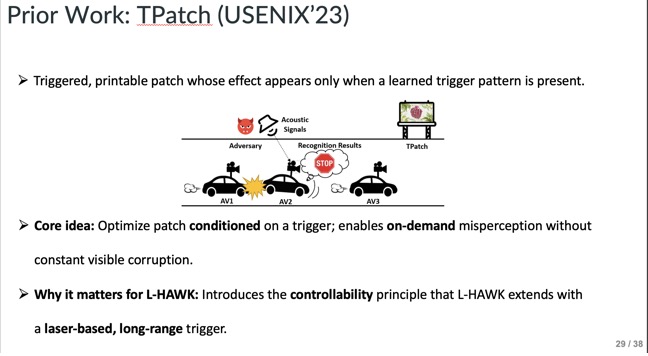

Slide - 29: TPatch

This slide explains TPatch’s acoustic trigger mechanism and how L-HAWK extends it using a laser for longer range and better control.

Slide - 30: Rolling Colors

They describe a prior laser-based color-stripe attack that misleads sign perception at a distance. L-HAWK builds upon its rolling-shutter insights to learn realistic triggers.

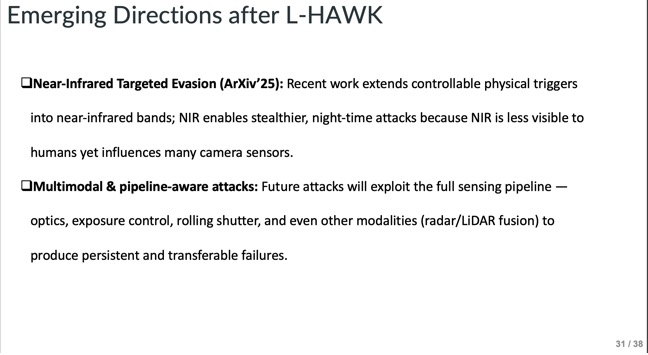

Slide - 31: Emerging Directions

The emerging directions after L-HAWK point toward near-infrared (NIR) attacks and multimodal (radar/LiDAR) triggers for greater stealth and robustness.

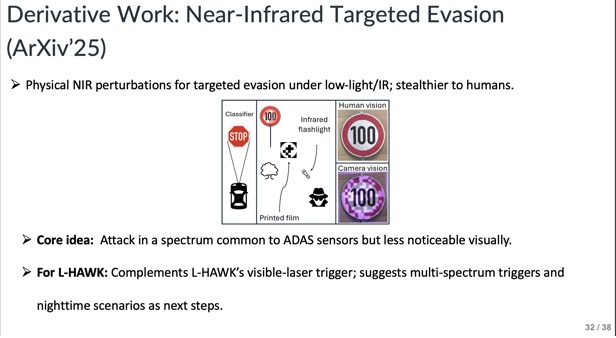

Slide - 32: Derivative Work

Recent research that uses NIR light for hidden attacks at night extends L-HAWK’s ideas to the invisible spectrum. The key idea is to model an attack in a spectrum common to ADAS sensors. This research also suggests the use of multi-spectrum triggers.

Slide - 33: Team-Work

This slide details each member’s role: Jordan handled the literature review and formatting, and Shafikul focused on methodology and evaluation analysis.

Slide - 34: Part 3 Q&A

This slide opened the question-and-answer segment, which prompted discussion of the attack’s feasibility, its implications, and the impact of L-HAWK on the future of AVs.

Slide - 35: Part 4 Discussion

The discussion slide opened another interactive section of the presentation, inviting class debate on the questions in the following slides.

Slide - 36: Discussion

The class discussed whether L-HAWK-type attacks could bypass multi-sensor fusion (e.g., LiDAR plus cameras) and how adaptive defense mechanisms might randomize camera exposure to block such attacks.

Future triggers could move beyond lasers and sound into multi-spectrum or cross-modal triggers, such as infrared or electromagnetic interference invisible to humans but detectable by sensors. Researchers may also explore sensor-fusion deception, where coordinated signals subtly mislead multiple sensing modalities simultaneously for more reliable, stealthy activation.

Our group believes that glare and autofocus adjustments degrade L-HAWK’s effectiveness most strongly, because they change the camera exposure and suppress the laser-induced stripe. To counter this, attackers could use adaptive power control or synchronized pulsing that compensates for brightness changes and maintains consistent pixel intensity on the sensor.

Slide - 37: Reference

This slide lists all cited academic works, including Poltergeist, Rolling Colors, TPatch, and NIR-based attacks.

Slide - 38: Final Slide

This is the closing slide, with acknowledgments and the end of the presentation.